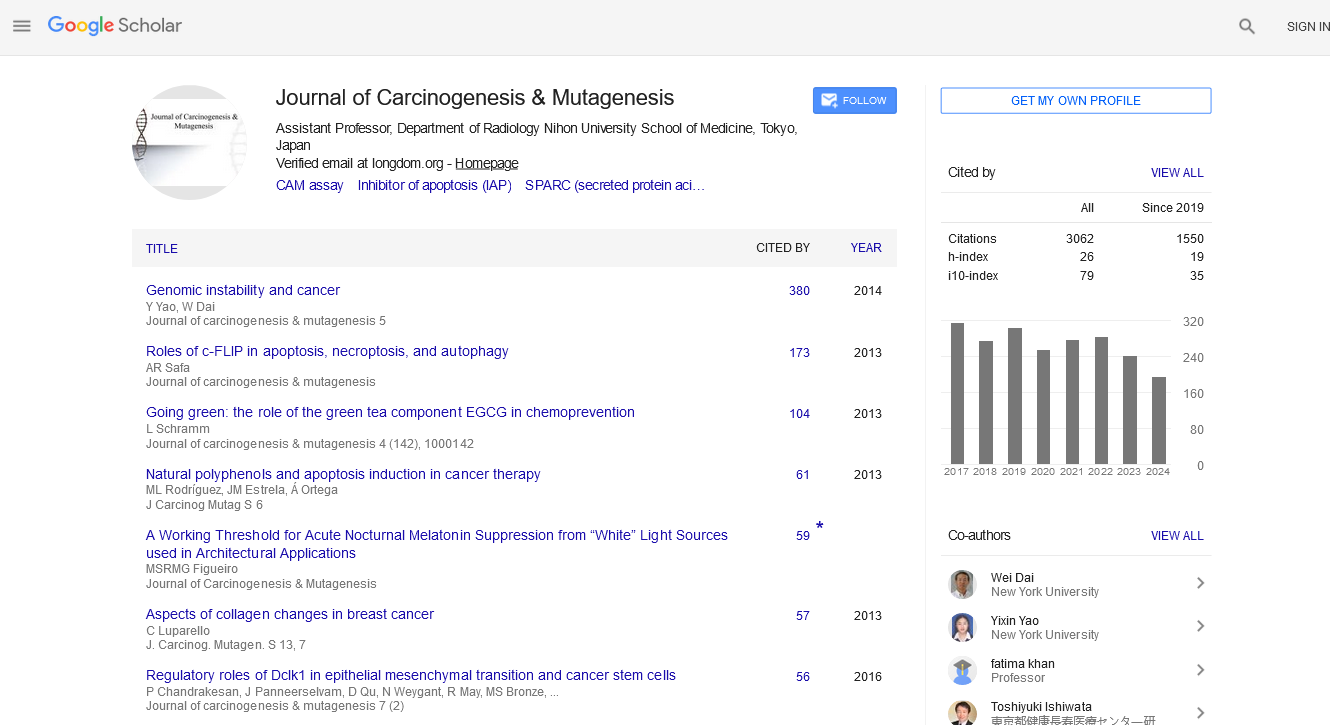

Indexed In

- Open J Gate

- Genamics JournalSeek

- JournalTOCs

- Ulrich's Periodicals Directory

- RefSeek

- Hamdard University

- EBSCO A-Z

- OCLC- WorldCat

- Publons

- Geneva Foundation for Medical Education and Research

- Euro Pub

- Google Scholar

Useful Links

Share This Page

Journal Flyer

Open Access Journals

- Agri and Aquaculture

- Biochemistry

- Bioinformatics & Systems Biology

- Business & Management

- Chemistry

- Clinical Sciences

- Engineering

- Food & Nutrition

- General Science

- Genetics & Molecular Biology

- Immunology & Microbiology

- Medical Sciences

- Neuroscience & Psychology

- Nursing & Health Care

- Pharmaceutical Sciences

Review Article - (2023) Volume 14, Issue 6

Bone Marrow Cytomorphology Cell Detection using InceptionResNetV2

Raisa Fairooz Meem* and Khandaker Tabin Hasan

Received: 11-Oct-2023, Manuscript No. JCM-23-23530; Editor assigned: 13-Oct-2023, Pre QC No. JCM-23-23530 (PQ); Reviewed: 27-Oct-2023, QC No. JCM-23-23530; Revised: 03-Nov-2023, Manuscript No. JCM-23-23530 (R); Published: 13-Nov-2023, DOI: 10.35248/2157-2518.2.14.430

Abstract

Purpose: Critical decision points in the field of hematology heavily rely on the inclusion of bone marrow cytology for diagnosing haematological conditions. However, the utilization of bone marrow cytology is limited to specialized reference facilities with expert knowledge, resulting in significant inter-observer variability and time-consuming processing. These limitations can potentially lead to delayed or inaccurate diagnoses, highlighting the urgent need for state-of-the-art supporting technologies.

Methods: This research paper introduces a transfer learning model using InceptionResNetV2 specifically developed for the detection of bone marrow cells, offering a comprehensive solution to address the existing challenges in this area.

Results: The proposed model demonstrates an impressive accuracy rate of 96.19%, making it a valuable tool for analyzing medical images in this domain.

Conclusion: The success of this experiment plays an important role in future applications and advancements in the field of haematology research.

Keywords

Medical image analysis, Bone marrow cell detection, Transfer learning, InceptionResNetV2

Introduction

Analysis of bone marrow smears holds immense importance in haematological disease detection. This diagnostic procedure is employed to investigate suspected hematological issues, determine lymphoma stages, and evaluate the response of the bone marrow to chemotherapy in acute leukaemia [1]. Additionally, it is utilized in the identification of various factors in Myelodysplastic Syndrome (MDS) and Myeloproliferative Neoplasm (MPN), such as aberrant cellular morphology, excessive presence of blasts, and the overall cellularity of the specimen, among others [2].

The absence of comprehensive computerized procedures has resulted in a heavy reliance on the expertise of healthcare professionals for conducting critical analyses in this field. Compared to blood film cytology, the examination of bone marrow aspirates presents more complex cytological specimens. These aspirates contain a limited number of cytologically relevant regions, a significant amount of non-cellular debris, and a diverse array of cell types that often appear in aggregates or overlap with each other. Consequently, computational pathology for bone marrow cytology has proven difficult [3].

The examination and differentiation of cell morphologies in Bone Marrow (BM) play a critical role in diagnosing both malignant and nonmalignant diseases affecting the haematopoietic system. Despite the advancements in techniques such as cytogenetics, immunophenotyping, and molecular genetics, the evaluation of cytomorphology remains an essential initial step in diagnosing various diseases within and outside the bone marrow. Automating this process has proven to be challenging, resulting in human experts being primarily responsible for microscopic analysis and classifying single-cell morphology in clinical workflows. However, manual evaluation of BM smears can be time-consuming and labor-intensive, relying heavily on the examiner’s knowledge and experience, particularly in cases where the results are ambiguous. The limited availability of qualified experts constrains the number of high-quality cytological examinations, and there is considerable variability in classifications both between and within examiners. Additionally, integrating this qualitative analysis of individual cell morphologies with other diagnostic techniques that offer quantitative data presents additional challenges [4].

As stated by Fan et al. [5], the expertise of a hematopathologist remains important in diagnosing haematological diseases, as there is a lack of clinical-grade solutions for bone marrow cytology. The current commercially available computational pathology workflow support does not provide the desired efficiency, highlighting the need for an affordable yet effective procedure for analyzing peripheral blood cytology. Machine learning, particularly deep learning approaches, has proven to be the most effective solution for this purpose.

Deep learning techniques such as R-CNN (Region-based Convolutional Neural Networks), Fast R-CNN, and Faster R-CNN are commonly used for object detection. However, these methods have limitations in terms of computational efficiency and training difficulty due to their reliance on region proposals and subsequent object classification [3]. Although Convolutional Neural Networks (CNN) have been widely employed for image categorization, their application in medical image analysis is hindered by the scarcity of large training datasets, leading to overfitting or convergence issues [6,7]. Object categorization in bone marrow analysis poses an additional challenge, as it involves assigning individual cells or non-cellular objects to multiple discrete classes based on intricate cytological characteristics. This complexity is further amplified in cases of morphological dysplasia in diseases like MDS. Previous attempts to address these challenges by fine-tuning the Faster R-CNN have proven operationally inefficient and impractical for clinical diagnostic workflows. To enable effective bone marrow aspirate cytology, novel computational pathology methodologies are required. These methodologies should offer end-to-end automation, encompassing tasks from raw image processing to bone marrow cell counting and classification [3].

Transfer learning has emerged as a prominent technique in medical image analysis, capturing the attention of researchers in recent years. It is a branch of Convolutional Neural Networks (CNN) that leverages pre-initialized weights, enabling quick and accurate training on larger datasets. The key advantage of transfer learning is that instead of starting from scratch with random weights, the pre-trained weights can be applied to categorize entirely different datasets [8]. Many widely used models are trained on the ImageNet dataset, an extensive collection of annotated images designed for visual object recognition software development. While ImageNet does not own the actual images, the annotations for third-party image URLs are freely accessible. The transfer learning process involves utilizing a deep CNN model that has been pre-trained on a vast dataset. This model is further fine-tuned using a new dataset with fewer training images. The initial layers of CNN models typically learn low-level features such as edges, curves, and corners, while the later layers focus on more abstract features. In transfer learning, the remaining layers are often reconfigured for new classification tasks, while the fully connected layer, SoftMax layer, and classification output layer are modified accordingly [9]. Transfer learning has been a popular choice, particularly when dealing with limited datasets, as it eliminates the need to train models from scratch with randomly initialized weights [10].
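The idea of keeping pre-trained layers fixed and retraining only the final classification layer can be illustrated with a deliberately small, dependency-free sketch. Everything in it is hypothetical: `FROZEN_WEIGHTS` stands in for a pre-trained feature extractor, `extract_features`, `train_head`, and `predict` are illustrative names, and the data are made up. It is not the network used in this study.

```python
import math

# Hypothetical "pre-trained" weights: frozen, never updated during training.
FROZEN_WEIGHTS = [[0.5, -0.2], [0.1, 0.8]]


def extract_features(x):
    """Frozen layer: a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=200, lr=0.5):
    """Train only the new head (logistic regression) on top of frozen features."""
    head = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            f = extract_features(x)
            p = sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            head = [h - lr * err * fi for h, fi in zip(head, f)]
            bias -= lr * err
    return head, bias


def predict(x, head, bias):
    f = extract_features(x)
    return 1 if sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias) >= 0.5 else 0


# Made-up toy data: class 1 when the first input dominates.
samples = [[2.0, 0.0], [1.5, 0.2], [0.0, 2.0], [0.2, 1.5]]
labels = [1, 1, 0, 0]
head, bias = train_head(samples, labels)
```

Only `head` and `bias` change during training; the frozen weights are reused as they are, which is the reason fewer training images can suffice.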

The InceptionResNet model, a popular Transfer Learning (TL) model, was created by combining Inception and ResNet, two highly effective models. This model, developed using the ImageNet database, incorporates batch normalization in place of convolutional layer summation. Dropout layers are utilized during training to randomly set input units to 0, thus preventing overfitting. To accommodate subsequent layers that require one-dimensional data arrays, a flattening strategy is employed. The same approach is used to generate a feature vector from the output data. The model establishes a fully connected layer with a batch size of 32 and utilizes a “binary cross-entropy” loss function by connecting to the final layer of the classification model [11].

This research focuses on the analysis of a limited dataset of bone marrow smears using InceptionResNetV2 to identify cells indicative of various haematological diseases. The article is structured into five sections. The second section provides an overview of the advancements made in this field thus far. The third section outlines the methods and materials used in the study. The fourth section presents the findings of the research. Finally, the fifth section concludes with recommendations for future research in this area.

Literature Review

In the realm of medical image analysis, Convolutional Neural Networks (CNN) and Deep Learning (DL) have gained significant prominence. Researchers are increasingly employing these technologies to analyze medical images. Notably, Transfer Learning (TL) has garnered attention in this field, as it offers a solution to the challenge of requiring large training datasets. TL enables the transfer of knowledge from pre-trained models to new tasks, thereby mitigating the need for extensive training data.

To provide an up-to-date overview of the topic, Morid, Mohammad Amin, et al. [12], conducted a review of selected articles published after 2018. Their review focused on the application of various transfer learning models trained on ImageNet for the analysis of clinical images. According to their analysis, the choice of model depends on the specific type of images being processed. Inception models are commonly utilized for X-ray, endoscopic, or ultrasound images, while VGGNet tends to perform better for OCT or skin lesion images. Regardless of the image type or organ, the most frequently used models are Inception, VGGNet, AlexNet, and ResNet, in descending order. Only a few papers mentioned the InceptionResNet model, likely due to its novelty at the time.

The literature review conducted by Kim, Hee E, et al. also reinforced the selection of Inception and ResNet models [13]. They examined 425 articles on medical image classification utilizing transfer learning models published in 2020. The findings highlighted the efficacy of these models, particularly considering the limited availability of data. Their study focused on reducing costs and time for clinical image detection, yielding interesting insights. First, fine-tuning only the last fully connected layers of most transfer learning models yielded better results compared to starting from scratch. Second, fine-tuning the convolutional layers with a low learning rate in a top-to-bottom approach enhanced the overall performance of the model. Based on their analysis of various models employed in the selected articles, Inception and ResNet models emerged as highly effective feature extractors, capable of achieving impressive accuracy while reducing computational costs and time requirements.

Faruk, Omar, et al. conducted a study utilizing four different transfer learning models, namely Xception, InceptionV3, InceptionResNetV2, and MobileNetV2, for tuberculosis detection using X-ray images [11]. Each model included three MaxPooling2D layers, four Conv2D layers, a flatten layer, two dense layers, and a ReLU activation function. Some adjustments were made to the final layers, with the last dense layer using SoftMax as its activation. Customizations were implemented using layers such as average pooling, flattening, dense, and dropout. Among the four models, InceptionResNetV2 demonstrated the highest accuracy, achieving an accuracy rate of 99.36%.

Matek, Christian, et al. [4], applied the ResNeXt model to the classification of bone marrow cell morphology. ResNeXt incorporates a structural component that combines multiple transformations with the same topology. Unlike ResNet, which considers depth and width dimensions, ResNeXt introduces cardinality as an additional dimension, representing the size of the transformation set. The researchers specifically chose this model because it had been successfully applied to categorize peripheral blood smears. For their research, they set the cardinality hyperparameter to 32. They adopted a single-center approach, ensuring that all BM smears used for training were prepared in the same laboratory and digitized using consistent scanning tools. The network demonstrated promising performance in this study, with external validation indicating its ability to generalize to data collected in different settings, despite the limited amount of available data.

Yu, Ta-Chuan, et al. [1], proposed a deep CNN architecture in their study to automatically identify and classify bone marrow cells. The dataset used in their research consisted of Liu’s stained images of bone marrow smears from patients at the National Taiwan University Hospital. The proposed model incorporated various techniques such as group normalization, color shift, and Gaussian blur for tasks such as model training and data augmentation. The model achieved an impressive accuracy of 93.6%.

Rahman, Jeba Fairooz, and Mohiuddin Ahmad [14], conducted a comparative study on four transfer learning models, namely AlexNet, VGG-16, ResNet50, and DenseNet161, for the detection of Acute Myeloid Leukemia (AML), characterized by the presence of immature leukocytes in the blood and bone marrow. The researchers modified the models by employing a binary classifier and ReLU activation function to focus on detecting mature and immature leukocytes only. Among the models evaluated, AlexNet achieved the highest accuracy of 96.52% in leukocyte detection.

A significant portion of research in haematological disease analysis focuses on the detection of Acute Lymphoblastic Leukemia (ALL) cells. ALL is a type of cancer that affects the organs and tissues involved in blood production and circulation. It disrupts the normal production of healthy white blood cells, which play a crucial role in defending the body against various diseases [15].

Kumar, Deepika, et al. [16], proposed a Dense CNN model in their study to accurately identify ALL and Multiple Myeloma. They compared the performance of their model with various machine learning methods such as Support Vector Machine (SVM), VGG16, Naïve Bayes, Random Forest (RF), and Decision Tree (DT). The model, trained using the Adam optimizer and consisting of multiple convolution, pooling, and connected layers with the Sigmoid loss function, achieved an impressive accuracy of 97.25%.

Both Liu, Ying, et al. [17], and Ramaneswaran, S, et al. [18], employed transfer learning models for the classification of ALL cells. Liu, Ying, et al. used InceptionResNetV2 as the backbone network and implemented a two-stage deep bagging ensemble learning technique. They trained the model using bagging ensemble learning to address data imbalance and combined the outputs of two separate models to improve performance. Their model achieved an F1 score of 0.88 in cell classification. On the other hand, Ramaneswaran, S, et al. utilized InceptionV3 as the feature extractor and employed XGBoost Classifier instead of SoftMax. Their hybrid model achieved an impressive accuracy of 97.9% in classifying ALL cells.

Abir, Wahidul Hasan, et al. [19], conducted a comparative study to evaluate the performance of various transfer learning models in identifying Acute Lymphoblastic Leukemia (ALL) cells. The models examined were InceptionV3, InceptionResNetV2, ResNet101V2, and VGG19. To ensure the reliability and validity of their proposed model, they employed the Local Interpretable Model-agnostic Explanations (LIME) algorithm and Explainable Artificial Intelligence (XAI) techniques. To address the class distribution imbalance in the dataset, they utilized a stratified k-fold cross-validation approach. The InceptionV3 model achieved the highest accuracy of 96.65% on the validation set, outperforming the other models. However, for the training set, the InceptionResNetV2 model attained the highest accuracy of 99.14%.

Tayebi, Rohollah Moosavi, et al. [3], proposed a You-Only-Look-Once (YOLO) model for automated bone marrow cytology. They developed an end-to-end system based on deep learning to detect suitable regions for cytology in digital whole-slide images of bone marrow aspirates. The model then identified and categorized each bone marrow cell within these regions. The comprehensive cytomorphological data was represented by the Histogram of Cell Types (HCT), which quantifies the probability distribution of bone marrow cell classes. The proposed system exhibited impressive performance in region detection, with an accuracy of 0.97 and a ROC AUC of 0.99. For cell detection and classification, it achieved a mean average precision of 0.75, an average F1-score of 0.78, and a log-average miss rate of 0.31.

Loey, et al. [20], claimed to achieve 100% accuracy in detecting leukaemia using transfer learning models. They employed two models for the task. The first model utilized AlexNet for feature extraction and employed various classifiers, including SVM (Linear, Gaussian, and Cubic), Decision Tree (DT), Linear Discriminants (LD), and K-Nearest Neighbors (K-NN), for the classification process. Among these classifiers, the model using SVM-Cubic achieved the highest accuracy of 99.79%. The second model used AlexNet for both feature extraction and classification, achieving 100% accuracy in detecting leukaemia from blood cell image slides.

In summary, TL models are widely employed in the field of detecting brain and lung diseases, including various types of cancer. The success of these models has led researchers to explore their potential in detecting issues in other organs too. The remarkable accuracy exhibited by several models in identifying haematological diseases from Bone Marrow Cell images has motivated us to conduct further studies in this field.

Methodology

Dataset

The dataset utilized in the study conducted by Matek et al. [21], consists of a total of 171,375 cells obtained from bone marrow smears of 945 patients. These cells were stained with the May-Grünwald-Giemsa/Pappenheim stain, and all identifying information has been removed. Additionally, expert annotations have been provided for each cell. The patients included in the dataset exhibit a variety of haematological conditions, which align with the typical entries found in a large laboratory that focuses on diagnosing leukaemia. The images were captured using a brightfield microscope with a 40X magnification and oil immersion. For this study, a randomly selected subset comprising 20% of the dataset was utilized. The images in the dataset are categorized into 21 different groups based on the cell type or aspect they represent, and these categories can be found in Table 1.

| Abbreviation | Meaning |
|---|---|
| ABE | Abnormal eosinophil |
| ART | Artefact |
| BAS | Basophil |
| BLA | Blast |
| EBO | Erythroblast |
| EOS | Eosinophil |
| FGC | Faggot cell |
| HAC | Hairy cell |
| KSC | Smudge cell |
| LYI | Immature lymphocyte |
| LYT | Lymphocyte |
| MMZ | Metamyelocyte |
| MON | Monocyte |
| MYB | Myelocyte |
| NGB | Band neutrophil |
| NGS | Segmented neutrophil |
| NIF | Not identifiable |
| OTH | Other cells |
| PEB | Proerythroblast |
| PLM | Plasma cell |
| PMO | Promyelocyte |

Table 1: Cell classes included in the dataset and their abbreviations.
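Drawing a random 20% subset, as described for this dataset, could be sketched as follows. This is only an illustration under stated assumptions: the function name `sample_subset`, the seed value, and the placeholder file names are hypothetical, and the source does not say whether the sampling was stratified by class.

```python
import random


def sample_subset(items, fraction=0.2, seed=42):
    """Return a reproducible random subset holding `fraction` of `items`."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    k = round(len(items) * fraction)
    rng = random.Random(seed)  # local generator; global random state is untouched
    return rng.sample(items, k)


# Hypothetical file names standing in for the cell images.
image_paths = [f"cell_{i:06d}.jpg" for i in range(1000)]
subset = sample_subset(image_paths)
```

Fixing the seed makes the subset repeatable across runs, which helps when results need to be compared later.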

Evaluation criteria

In order to assess the effectiveness of the transfer learning model, a confusion matrix was employed, taking into account four possible outcomes. These outcomes include True Positive (TP), which occurs when the model accurately detects the presence of a condition. True Negative (TN) refers to cases where the model correctly identifies the absence of a characteristic. On the other hand, False Positive (FP) represents instances where the model falsely indicates the presence of a condition, and False Negative (FN) signifies situations where the model mistakenly fails to identify the existence of a condition. A comprehensive explanation of the confusion matrix can be found in Table 2.

| I/O | Output Negative | Output Positive |
|---|---|---|
| Input Positive | False Negative (FN) | True Positive (TP) |
| Input Negative | True Negative (TN) | False Positive (FP) |

Table 2: Explanation of TP, TN, FP, and FN.
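The four outcomes can be counted directly from paired actual and predicted labels. The sketch below treats one class as "positive"; the function name `confusion_counts` and the labels are made up for illustration and do not come from the study.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP and FN for one class treated as 'positive'."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


# Made-up labels for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
```

For a multi-class problem such as the 21 cell classes, the same counting can be repeated once per class, each time treating that class as positive and all others as negative.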

To assess the output, five criteria are employed: accuracy, loss, precision, recall, and AUC.

Accuracy measures the level of correspondence between the expected and actual results and is expressed as a percentage. It is calculated by dividing the sum of true positive and true negative outcomes by the total number of possible outcomes:

Accuracy=(TP+TN)/(TP+TN+FP+FN)

Precision is the proportion of positive predictions that are actually correct. It is calculated by dividing the number of true positives by the sum of true positives and false positives:

Precision=TP/(TP+FP)

Recall is the proportion of actual positive instances that the model identifies. It is calculated by dividing the number of true positives by the sum of true positives and false negatives:

Recall=TP/(TP+FN)
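Following the verbal definitions above, the three ratios can be computed from the confusion-matrix counts as in this minimal sketch. The counts are invented for illustration, and returning 0.0 for an empty denominator is a convention chosen here, not something stated in the source.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all outcomes that are true positives or true negatives."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


def precision(tp, fp):
    """Share of positive predictions that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of actual positives that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Invented counts for illustration only.
tp, tn, fp, fn = 30, 50, 10, 10
acc = accuracy(tp, tn, fp, fn)   # 80 / 100
prec = precision(tp, fp)         # 30 / 40
rec = recall(tp, fn)             # 30 / 40
```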

For this particular experiment, a batch size of 32 and only 5 epochs were selected. Google Colaboratory served as the coding platform, utilizing a GPU runtime. The experiment employed the categorical cross-entropy loss function. Based on the findings of Zaheer et al. [22], the Adam optimizer, whose name derives from adaptive moment estimation, outperformed other optimizers in terms of accuracy, which is why it was chosen for this experiment.
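Assuming the loss named above refers to the standard categorical cross-entropy used for multi-class classification, its value for a single sample can be sketched as below. The function names, the three-class example, and the clipping constant `eps` are illustrative assumptions; the study's own code is not available in the text.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Loss for one sample: -sum(y_i * log(p_i)), with p_i clipped away from 0."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(one_hot, probs))


# Made-up scores for a three-class toy problem; the true class is the first one.
probs = softmax([2.0, 1.0, 0.1])
loss = categorical_cross_entropy([1, 0, 0], probs)
```

The loss shrinks toward 0 as the probability assigned to the true class approaches 1, and grows as that probability falls.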

Results and Discussion

The performance of InceptionResNetV2 on bone marrow cells, summarized in Table 3, yields several findings. Upon evaluating the model on the training set, a loss of 5.7916 is observed, which indicates the overall error during the training process. A high accuracy of 96.39% suggests that the model has successfully learned to classify bone marrow cells with a notable degree of precision. The precision value of 0.6214 denotes the proportion of correctly predicted positive instances among all the positive predictions, while the recall value of 0.6171 represents the ability of the model to identify positive instances from the actual positive samples. Moreover, the AUC value of 0.8472 signifies the model’s capacity to discriminate between positive and negative samples, with a higher value indicating superior performance.

| Set | Loss | Accuracy | Precision | Recall | AUC |
|---|---|---|---|---|---|
| Training | 5.7916 | 96.39% | 0.6214 | 0.6171 | 0.8472 |
| Validation | 7.2734 | 96.19% | 0.6 | 0.5968 | 0.8297 |

RMSE value = 0.66286

Table 3: Evaluation of InceptionResNetV2 for bone marrow cell detection.

The evaluation of the InceptionResNetV2 model on the validation set revealed a loss of 7.2734. While this value is higher compared to the training set, it is still within acceptable limits, indicating the model’s generalization ability. The accuracy of 96.19% on the validation set further corroborates the model’s robustness in classifying bone marrow cells accurately. The precision value of 0.6 and recall value of 0.5968 demonstrate consistent performance in identifying positive instances, and the AUC value of 0.8297 indicates commendable discrimination capabilities.

The RMSE value quantifies the average magnitude of the model’s prediction errors. The low RMSE value of 0.66286 highlights the model’s ability to provide predictions that closely align with actual values, affirming its efficacy in bone marrow cell detection.
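For reference, the root mean squared error is the square root of the mean squared difference between actual and predicted values. The sketch below uses invented numbers; how the study's RMSE was computed over the model outputs is not stated, so this shows only the general formula.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - b) ** 2 for a, b in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


# Invented values for illustration only.
value = rmse([1.0, 0.0, 0.0, 1.0], [0.8, 0.1, 0.3, 0.6])
```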

The obtained results signify the potential of the InceptionResNetV2 model for accurate bone marrow cell detection. Its high accuracy, precision, and recall on both the training and validation sets indicate robustness and generalization capabilities. Furthermore, the AUC values above 0.8 affirm the model’s capacity to effectively differentiate between positive and negative samples. The low RMSE value underscores the model’s accuracy in predicting cell characteristics, promising valuable insights for medical practitioners.

Conclusion

Transfer learning models offer several advantages in the context of biological image segmentation, including reducing the risk of false detection, simplifying complexity, minimizing human involvement, and saving time. These benefits are particularly valuable in the field of blood disorder analysis, as they can ensure that even individuals with limited resources receive competent medical care. The present study focuses on evaluating the effectiveness of the InceptionResNetV2 model in detecting bone marrow cells, a critical component of blood disorder analysis that has not received sufficient attention from researchers exploring the potential applications of transfer learning models in this domain. To gain deeper insights, additional criteria were applied to assess the model’s output. The selected model achieved an impressive accuracy rate of 96.19%. This research highlights potential avenues for future investigations, including:

• Conducting a comparative analysis of various transfer learning models to determine if any model exhibits superior performance compared to InceptionResNetV2.

• Undertaking further research to enhance the accuracy of InceptionResNetV2 in detecting bone marrow cells.

• Exploring the potential of utilizing the InceptionResNetV2 model for the detection of other bone diseases and ailments in different organs.

Declarations

Funding

No funds, grants, or other support was received for this work.

Conflict of interest

The authors declare that they have no conflict of interest in this work.

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors. No human or animal was involved in the process. The researchers worked only with publicly available medical imaging data.

Consent to participate

All the mentioned authors have made substantial contributions to the conception of the work, revised it critically for important intellectual content and agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Consent for publication

All the mentioned authors have approved the version to be published.

Availability of data and materials

The data and materials involved in this study have been collected from publicly available sources. The dataset analysed during the current study is available in The Cancer Imaging Archive repository, https://doi.org/10.7937/TCIA.AXH3-T579

Code availability

Codes can be provided upon request.

Authors’ contributions

All authors contributed to the study conception and design. All authors read and approved the final manuscript.

References

- Yu TC, Chou WC, Yeh CY, Yang, et al. Automatic bone marrow cell identification and classification by deep neural network. Blood. 2019;134:2084.

- Brück OE, Lallukka-Brück SE, Hohtari HR, Ianevski A, Ebeling FT, Kovanen PE, et al. Machine learning of bone marrow histopathology identifies genetic and clinical determinants in patients with MDS. Blood Cancer Discov. 2021;2(3):238-249.

- Tayebi RM, Mu Y, Dehkharghanian T, Ross C, Sur M, Foley R, et al. Automated bone marrow cytology using deep learning to generate a histogram of cell types. Commun Med. 2022;2(1):45.

- Matek C, Krappe S, Münzenmayer C, Haferlach T, Marr C. Highly accurate differentiation of bone marrow cell morphologies using deep neural networks on a large image data set. Blood. 2021;138(20):1917-1927.

- Fan J, Lee J, Lee Y. A transfer learning architecture based on a support vector machine for histopathology image classification. Applied Sci. 2021;11(14):6380.

- Mujahid M, Rustam F, Alvarez R, Luis Vidal Mazon J, Díez IdlT, Ashraf I. Pneumonia classification from X-ray images with Inception-V3 and convolutional neural network. Diagnostics. 2022;12(5):1280.

- Swati ZNK, Zhao Q, Kabir M, Ali F, Ali Z, Ahmed S, et al. Brain tumor classification for MR images using transfer learning and fine-tuning. Comput Med Imaging Graph. 2019;75:34-46.

- Kandel I, Castelli M. Transfer learning with convolutional neural networks for diabetic retinopathy image classification. A review. Applied Sci. 2020;10(6):2021.

- Deniz E, Sengur A, Kadiroglu Z, Guo Y, Bajaj V, Budak U. Transfer learning based histopathologic image classification for breast cancer detection. Health Inf Sci Syst. 2018;6:1-7.

- Hussain M, Bird JJ, Faria DR. A study on CNN transfer learning for image classification. Adv Comp Intel Sys. 2018;5-7.

- Faruk O, Ahmed E, Ahmed S, Tabassum A, Tazin T, Bourouis S, et al. A novel and robust approach to detect tuberculosis using transfer learning. J Healthc Eng. 2021.

- Morid MA, Borjali A, Del Fiol G. A scoping review of transfer learning research on medical image analysis using ImageNet. Comput Biol Med. 2021;128:104115.

- Kim HE, Cosa-Linan A, Santhanam N, Jannesari M, Maro ME, Ganslandt T. Transfer learning for medical image classification: a literature review. BMC Med Imaging. 2022;22(1):69.

- Rahman JF, Ahmad M. Detection of acute myeloid leukemia from peripheral blood smear images using transfer learning in modified CNN architectures. Proceedings of International Conference on Information and Communication Technology for Development. 2023;447-459. Springer.

- Afta MO, Awan MJ, Khalid S, Javed R, Shabir H. Executing spark bigdl for leukemia detection from microscopic images using transfer learning. IEEE. 2021;216–220.

- Kumar D, Jain N, Khurana A, Mittal S, Satapathy SC, Senkerik R, et al. Automatic detection of white blood cancer from bone marrow microscopic images using convolutional neural networks. IEEE. 2020;8:142521-142531.

- Liu Y, Long F. Acute lymphoblastic leukemia cells image analysis with deep bagging ensemble learning. Springer. 2019;113–121.

- Ramaneswaran S, Srinivasan K, Vincent PDR, Chang CY. Hybrid Inception v3 XGBoost model for acute lymphoblastic leukemia classification. Computation Math Method Med. 2021;1-10.

- Abir WH, Uddin MF, Khanam FR, Tazin T, Khan MM, Masud M, et al. Explainable ai in diagnosing and anticipating leukemia using transfer learning method. Comput Intell Neurosci 2022.

- Loey M, Naman M, Zayed H. Deep transfer learning in diagnosing leukemia in blood cells. Computers. 2020;9(2):29.

- Matek C, Krappe S, Münzenmayer C, Haferlach T, Marr C. An expert-annotated dataset of bone marrow cytology in hematologic malignancies [data set]. Cancer Imaging Arch (2021).

- Zaheer R, Shaziya H. A study of the optimization algorithms in deep learning. IEEE. 2019;536-539.

Citation: Meem RF (2023) Bone Marrow Cytomorphology Cell Detection using InceptionResNetV2. J Carcinog Mutagen. 14:430.

Copyright: © 2023 Meem RF. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.